Eden McEwen

Part 1: Nose Tip Detection

First, we take the Danes dataset and extract only the nose tip keypoint. This part covers the following:

- Show samples of data loader (5 points)

- Plot train and validation loss (5 points)

- Show how hyperparameters affect results (5 points)

- Show 2 success/failure cases (5 points)

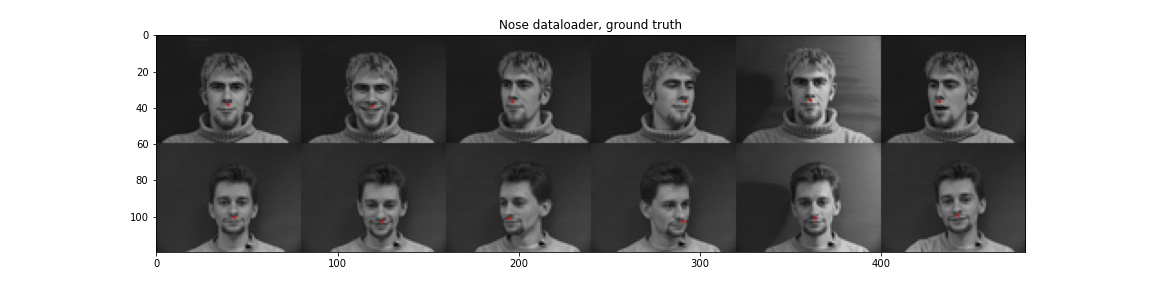

p1.1 Data Loader

The nose keypoint is extracted from the face dataset and returned alongside the corresponding 80x60 image when iterating through the dataset. We define our training and validation sets by passing in the indices at which to split the data.
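A minimal plain-Python sketch of this loader, assuming each sample is an (image, keypoints) pair with keypoints stored as a list of (x, y) tuples. The class name `NoseDataset` and the landmark index `NOSE_IDX` are hypothetical placeholders rather than the original code; a `torch.utils.data.Dataset` subclass exposes the same `__len__`/`__getitem__` pair.

```python
# Hypothetical index of the nose-tip landmark; check it against the dataset's annotation order.
NOSE_IDX = 52


class NoseDataset:
    """Returns (image, nose_point) pairs for samples[start:end]."""

    def __init__(self, samples, start, end):
        # samples: list of (image, keypoints) pairs; keypoints is a list of (x, y) tuples.
        # Slicing by an index range is what defines the train/validation split.
        self.samples = samples[start:end]

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, i):
        image, keypoints = self.samples[i]
        # Keep only the nose tip as the regression target.
        return image, keypoints[NOSE_IDX]
```

For example, `NoseDataset(samples, 0, 192)` and `NoseDataset(samples, 192, 240)` would split 240 samples into 192 training and 48 validation samples.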

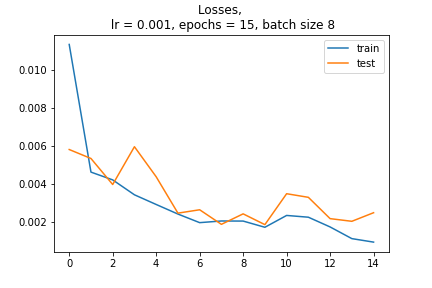

p1.2 Train and validation loss

In my most successful run, I used:

- Learning Rate: 0.001

- Batch Size: 8

- Epoch count: 15

After training, we see an average validation loss of 0.0001.
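The loss function is not named here; assuming mean squared error on normalized (x, y) coordinates, an average validation loss can be computed as a sample-weighted mean over validation batches. This plain-Python sketch uses hypothetical function names and stands in for the equivalent PyTorch evaluation loop.

```python
def mse(pred, target):
    """Mean squared error over all x and y coordinates of a batch of points."""
    diffs = [(p - t) ** 2
             for (px, py), (tx, ty) in zip(pred, target)
             for p, t in ((px, tx), (py, ty))]
    return sum(diffs) / len(diffs)


def average_validation_loss(batches):
    """batches: iterable of (predictions, targets) lists, one pair per batch.

    Each batch is weighted by its size, so a smaller final batch does not skew the mean.
    """
    total, count = 0.0, 0
    for pred, target in batches:
        total += mse(pred, target) * len(pred)
        count += len(pred)
    return total / count
```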

The training and validation loss are as follows:

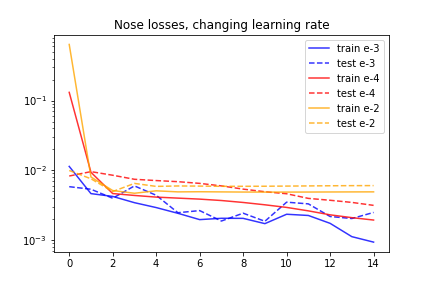

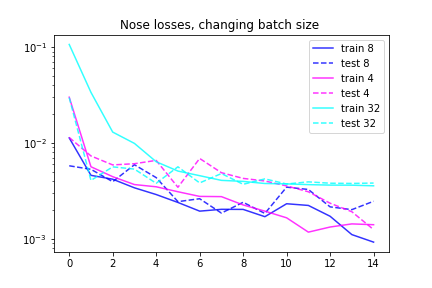

p1.3 Hyperparameters

Decreasing the learning rate (from 1e-3 to 1e-4) gives a gentler training slope, eventually reaching results similar to our chosen setting. Increasing the learning rate (from 1e-3 to 1e-2), however, makes the model converge too quickly, and the loss plateaus after about the 5th batch.
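As a toy illustration of this learning-rate trend (not the nose model itself), plain gradient descent on a one-parameter least-squares problem converges more slowly with a smaller step size. The data, step count, and function name are made up for this sketch.

```python
def gradient_descent(lr, steps, xs, ys, w0=0.0):
    """Fit y ~ w * x by minimizing mean squared error; returns the loss after each step."""
    w = w0
    n = len(xs)
    losses = []
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * x * (w*x - y)).
        grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad
        losses.append(sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n)
    return losses


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]  # the true slope is 2

for lr in (1e-4, 1e-3, 1e-2):
    losses = gradient_descent(lr, steps=200, xs=xs, ys=ys)
    print(f"lr={lr:g}: loss after 200 steps = {losses[-1]:.4f}")
```

In this toy problem a step size above roughly 0.13 would diverge instead, so a faster-converging learning rate is only better up to a point.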

We used a relatively small batch size of 8. Decreasing it further does not seem to change the outcome much, but increasing the batch size causes the training loss to plateau early.

p1.4 Successes and failures

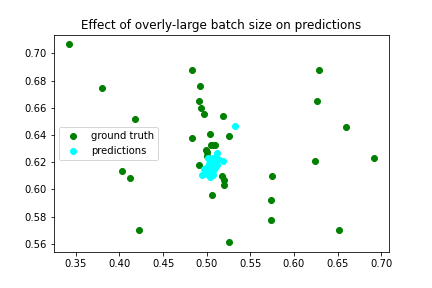

One of the initial failures was the model predicting only the average nose position for every image. Decreasing the batch size fixed this. The outputs of the large batch size test from the previous section show what this failure looks like.
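One quick check for this failure mode is to compare the model's validation error against a baseline that always predicts the mean training nose position; if the two are nearly equal, the model has likely collapsed to the average. The sketch below assumes (x, y) points in a shared coordinate system, and the function names and tolerance are hypothetical.

```python
def mean_point(points):
    """Average (x, y) position over a list of points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def mean_sq_dist(preds, targets):
    """Mean squared Euclidean distance between predicted and true points."""
    return sum((px - tx) ** 2 + (py - ty) ** 2
               for (px, py), (tx, ty) in zip(preds, targets)) / len(targets)


def looks_collapsed(model_preds, val_targets, train_targets, tol=0.05):
    """True if the model does no better (within a relative tol) than predicting the mean."""
    baseline = mean_point(train_targets)
    baseline_err = mean_sq_dist([baseline] * len(val_targets), val_targets)
    model_err = mean_sq_dist(model_preds, val_targets)
    return model_err >= (1 - tol) * baseline_err
```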

To understand this model's strengths and weaknesses, we can look at its output on the leftover validation set. Right away, it is clear that the first two rows have significant errors. This may be due to the woman's abundant hair, since there were fewer women to train on, and to the man's smaller head shape. Particular successes are the head-turn tracking in the second-to-last row, where the model follows the turn of the head, and the second-to-last image, where the nose is predicted with almost no error.

Part 2: Full Facial Keypoints Detection

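Next, we want to train a network to detect all of the facial keypoints, not just the nose tip. This part covers the following: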

- Show samples of data loader (5 points)

- Report detailed architecture (5 points)

- Plot train and validation loss (5 points)

- Show 2 success/failure cases (5 points)

- Visualize learned features (5 points)

p2.1 Data Loader

Show samples of data loader (5 points)

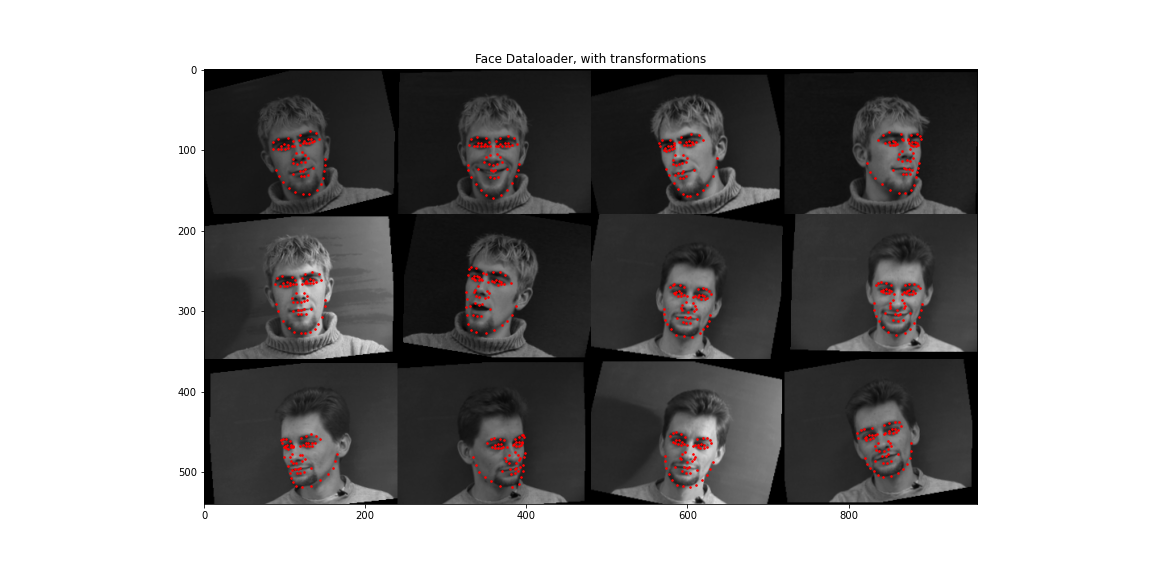

Here we can see samples from our data loader without augmentation. In this implementation, I decided to scale the images up to 240x180.
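When images are resized, keypoints stored in pixel coordinates must be scaled by the same factors, while keypoints stored as fractions of the image size stay unchanged. A minimal sketch, assuming (x, y) pixel keypoints and sizes given as (width, height); the function name is hypothetical.

```python
def rescale_keypoints(points, old_size, new_size):
    """Map (x, y) pixel keypoints from an image of old_size to one of new_size.

    Sizes are (width, height). Keypoints normalized to [0, 1] would not need this step.
    """
    sx = new_size[0] / old_size[0]
    sy = new_size[1] / old_size[1]
    return [(x * sx, y * sy) for x, y in points]
```

For example, going from 80x60 to 240x180 multiplies both coordinates by 3, so a point at (40, 30) moves to (120, 90).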

And with augmentations:

The augmentations applied were color jitter, a random rotation between -15 and 15 degrees, and a random shift between -10 and 10 pixels.
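Color jitter only changes pixel values, but the rotation and shift move the face, so the keypoints must be transformed with the same parameters as the image. A minimal plain-Python sketch, assuming pixel (x, y) keypoints and rotation about the image center; the function names are hypothetical. The sign of the angle must match whatever routine rotates the image: in y-down image coordinates, a positive angle here turns points clockwise on screen.

```python
import math
import random


def rotate_and_shift_keypoints(points, angle_deg, dx, dy, center):
    """Rotate (x, y) keypoints by angle_deg about center, then shift them by (dx, dy)."""
    cx, cy = center
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = []
    for x, y in points:
        # Standard 2D rotation about the center point.
        rx = cos_t * (x - cx) - sin_t * (y - cy) + cx
        ry = sin_t * (x - cx) + cos_t * (y - cy) + cy
        out.append((rx + dx, ry + dy))
    return out


def sample_augmentation(rng, max_angle=15.0, max_shift=10):
    """Draw one random angle (degrees) and integer pixel shift within the stated ranges."""
    angle = rng.uniform(-max_angle, max_angle)
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return angle, dx, dy
```

Usage: draw `angle, dx, dy = sample_augmentation(random.Random(0))` once per sample, apply those values to the image, and pass the same values to `rotate_and_shift_keypoints` with the image center, e.g. (120, 90) for a 240x180 image.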

p2.2 Architecture details

Report detailed architecture (5 points)

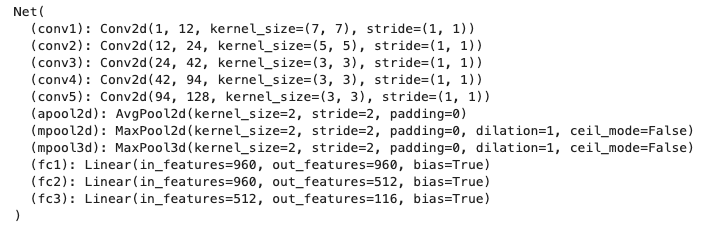

Many, many, many architectures were tried, and for most of them the model returned an average face. I settled on the following architecture, drawing inspiration from the philosophy of VGGNet. Each layer is a convolution with an increasing number of channels, followed by a ReLU, followed by a max pool with kernel size 2 and stride 2. All kernels except the first two are 3x3; making the first two larger helped with training. Finally, there are three fully connected layers.
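The channel counts and the sizes of the first two kernels are not listed here, so the configuration below is hypothetical. This sketch only shows how the spatial size shrinks through the conv, ReLU, and 2x2 max-pool blocks described above, which fixes the input size of the first fully connected layer for a 240x180 input.

```python
def conv_out(n, kernel, padding=0, stride=1):
    """Output length along one axis of a convolution (standard floor formula)."""
    return (n + 2 * padding - kernel) // stride + 1


def pool_out(n, kernel=2, stride=2):
    """Output length along one axis of a max pool."""
    return (n - kernel) // stride + 1


def flattened_features(height, width, kernels, channels):
    """Flattened feature size (and final (h, w)) after the conv + ReLU + max-pool blocks.

    Each conv uses padding = kernel // 2, so odd kernels preserve the spatial size
    and only the pooling shrinks it. ReLU does not change the shape.
    """
    h, w = height, width
    for k in kernels:
        h = pool_out(conv_out(h, k, padding=k // 2))
        w = pool_out(conv_out(w, k, padding=k // 2))
    return channels[-1] * h * w, (h, w)


# Hypothetical configuration: two larger kernels first, then 3x3 kernels.
kernels = [7, 5, 3, 3, 3]
channels = [16, 32, 64, 128, 256]
print(flattened_features(180, 240, kernels, channels))
```

With this configuration, each pooling step roughly halves the 180x240 feature map, so the first fully connected layer would need 256 * 5 * 7 input features.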

p2.3 Training and validation loss

Plot train and validation loss (5 points)

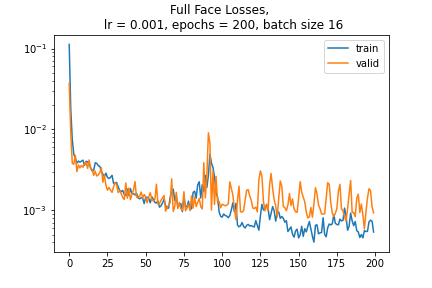

The model was trained with a batch size of 16 and a learning rate of 1e-3 for 200 epochs. The loss drops quickly up to about epoch 75, then jumps sharply, and what appears to be overfitting follows. Increasing the batch size might have smoothed this curve, but it did not seem necessary for the final results.
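Given the apparent overfitting late in training, one option is to keep the checkpoint with the lowest validation loss rather than the final one. This plain-Python sketch picks that epoch from a list of per-epoch validation losses; the function name and the optional patience rule are illustrative assumptions, not part of the original training setup.

```python
def best_epoch(val_losses, patience=None):
    """Return (epoch, loss) for the lowest validation loss, with epochs counted from 1.

    If patience is given, stop scanning once the loss has not improved for
    `patience` consecutive epochs (a simple early-stopping rule).
    """
    best_i, best_loss, since_best = 0, float("inf"), 0
    for i, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_i, best_loss, since_best = i, loss, 0
        else:
            since_best += 1
            if patience is not None and since_best >= patience:
                break
    return best_i, best_loss
```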

The final validation loss of the network on the 42 held-out images is 0.00092.

p2.4 Successes and failures

Show 2 success/failure cases (5 points)

Plotting some unseen validation images, we can see that the model struggles with thin heads or voluminous hair, as with the first and second subjects. It does identify points fairly well for the fourth subject, in both the full frontal and side views, following the turn of the head. I believe the woman's hair caused confusion in her failure case, and the beard caused confusion in the first case.

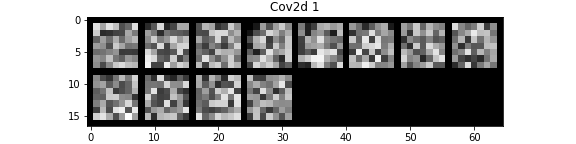

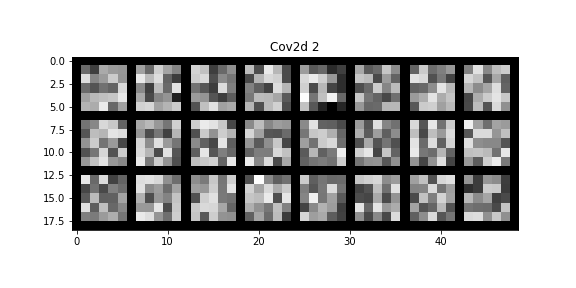

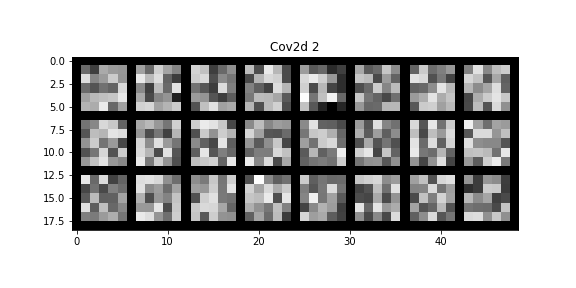

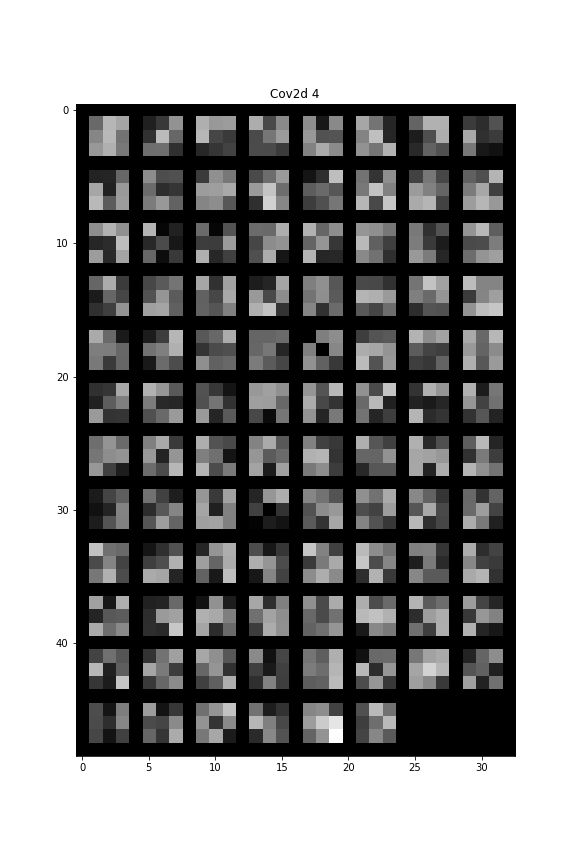
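To choose success and failure cases systematically rather than by eye, one could rank validation images by their mean per-keypoint distance. A minimal sketch, assuming predictions and ground truth are given per image as lists of (x, y) points; the function names are hypothetical.

```python
import math


def mean_point_error(pred, target):
    """Mean Euclidean distance between corresponding predicted and true keypoints."""
    return sum(math.dist(p, t) for p, t in zip(pred, target)) / len(target)


def rank_images(preds, targets):
    """Return image indices sorted from best (lowest error) to worst."""
    errors = [mean_point_error(p, t) for p, t in zip(preds, targets)]
    return sorted(range(len(errors)), key=lambda i: errors[i])
```

The first and last few indices of the ranking are natural candidates for the success and failure examples.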

p2.5 Visualize learned features

Visualize learned features (5 points)

Here, we visualize the learned kernels. Some of the larger kernels in the first layers look like edge detectors!
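Kernel weights can be small and negative, so they are usually rescaled before being displayed as images. A plain-Python sketch of per-kernel min-max normalization to [0, 1], assuming each kernel is a 2D list of floats for a single input channel; in PyTorch these values would come from a convolution layer's `weight` tensor. The function name is hypothetical.

```python
def normalize_kernel(kernel):
    """Min-max scale a 2D kernel to [0, 1] for display; a constant kernel maps to zeros."""
    flat = [v for row in kernel for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in kernel]
    return [[(v - lo) / span for v in row] for row in kernel]
```

For example, a horizontal-gradient kernel `[[-1.0, 0.0, 1.0]]` becomes `[[0.0, 0.5, 1.0]]`, which displays as a dark-to-bright ramp, the pattern that makes edge-detecting kernels easy to recognize.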

Part 3: Train With Larger Dataset

- Submit a working model to Kaggle competition (15 points)

- Report detailed architecture (10 points)

- Plot train and validation loss (10 points)

- Visualize results on test set (10 points)

- Run on at least 3 of your own photos (10 points)

p3.1 Kaggle Submission

Submit a working model to Kaggle competition (15 points)

Yes, I did submit to Kaggle, under the name EAM. No, it was not great.

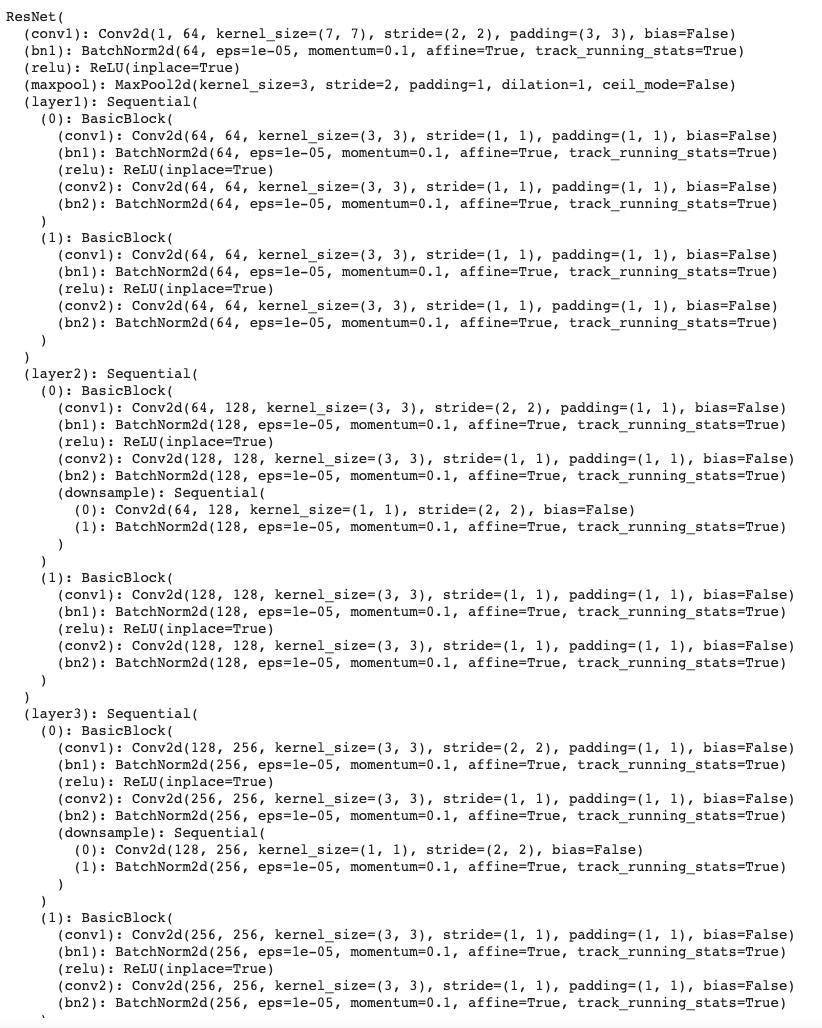

p3.2 Detailed architecture

Report detailed architecture (10 points)

For this section of the project, I trained a ResNet18 with a learning rate of 1e-3 and a batch size of 16 for 12 epochs. I changed the first layer to accept single-channel grayscale input, and the final layer to output 68*2 = 136 values (an x and a y coordinate for each of the 68 keypoints).
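These two modifications only touch the first and last layers. The arithmetic below is a sketch rather than the original code; it counts the parameters of those layers, assuming torchvision's ResNet18 layout: a 7x7, 64-channel first convolution without bias, and a final fully connected layer with 512 input features. In PyTorch, the change amounts to replacing `model.conv1` with an `nn.Conv2d` that takes one input channel and `model.fc` with an `nn.Linear(512, 136)`.

```python
def conv_params(in_ch, out_ch, kernel, bias=False):
    """Number of weights (plus optional biases) in a square 2D convolution."""
    return out_ch * in_ch * kernel * kernel + (out_ch if bias else 0)


def linear_params(in_features, out_features):
    """Number of weights plus biases in a fully connected layer."""
    return in_features * out_features + out_features


NUM_KEYPOINTS = 68

# First layer: RGB (3 channels) in the stock model vs. grayscale (1 channel) here.
rgb_conv1 = conv_params(3, 64, 7)
gray_conv1 = conv_params(1, 64, 7)

# Last layer: one x and one y output per keypoint.
fc = linear_params(512, NUM_KEYPOINTS * 2)

print(rgb_conv1, gray_conv1, fc)
```

The grayscale input cuts the first layer to a third of its weights, and the new head has 512 * 136 weights plus 136 biases.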

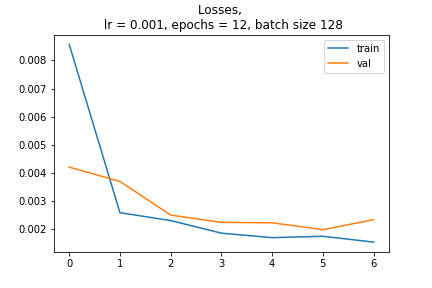

p3.3 Training and validation loss

Plot train and validation loss (10 points)

Below is a plot of the training progress for this model:

p3.4 Visualize results on test set

Visualize results on test set (10 points)

Here are the model's predictions on the test set, which include some obvious errors:

p3.5 Run on own images

Run on at least 3 of your own photos (10 points)

I ran out of time for this one, but I think it is very cool!